AI and Human Learning: What the Research Really Shows

AI is everywhere. And its use and capabilities are accelerating every day.

But is AI actually helping us get better at getting better?

Or is it just amplifying the friction, bottlenecks, and complexity that already exist in our workflows and processes?

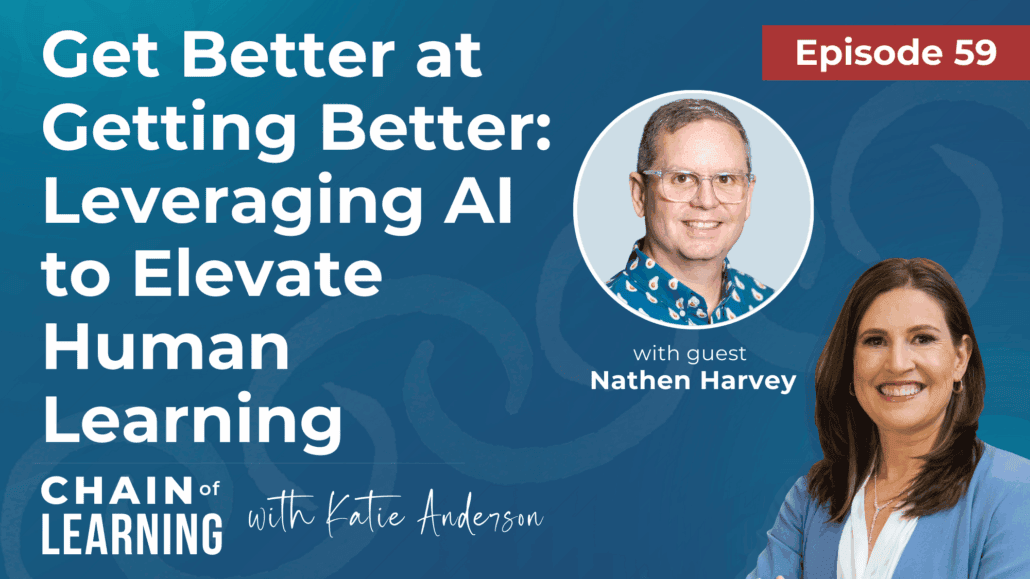

In this episode, Nathen Harvey, leader of the DORA Research team at Google, explores how AI is reshaping not just how we work, but how we can use it to elevate human work, collaborate as teams, and reach better outcomes.

Drawing on new findings from the DORA 2025 report on AI-assisted software development, we dig into what truly drives high performance—regardless of your industry or work—and how AI can either accelerate learning or amplify bottlenecks.

In this episode, you’ll learn:

✅ How AI accelerates learning—or intensifies friction—based on how teams use it

✅ Why AI magnifies what already exists, and why stronger human learning habits matter more than stronger tools

✅ The seven DORA team archetypes—and how to quickly spot strengths, gaps, and next steps for more effective collaboration

✅ How to use team characteristics to target where AI (or any tech) will truly move the needle and support continuous improvement

✅ How the Toyota Production System / lean principle of jidoka—automation with a human touch—guides us to use AI to elevate human capability, not replace it

Listen Now to Chain of Learning!

If you lead or work on any kind of team, tune in to discover how to use AI thoughtfully, so it supports learning and strengthens the people-centered learning culture you’re trying to build.

Watch the Episode

Watch my full conversation with Nathen Harvey on YouTube.

About Nathen Harvey

Nathen Harvey, Developer Relations Engineer, leads the DORA team at Google Cloud. DORA enables teams and organizations to thrive by making industry-shaping research accessible and actionable.

Nathen has learned and shared lessons from some incredible organizations, teams, and open source communities.

He is a co-author of multiple DORA reports on software delivery performance and a sought-after speaker on DevOps and software development.

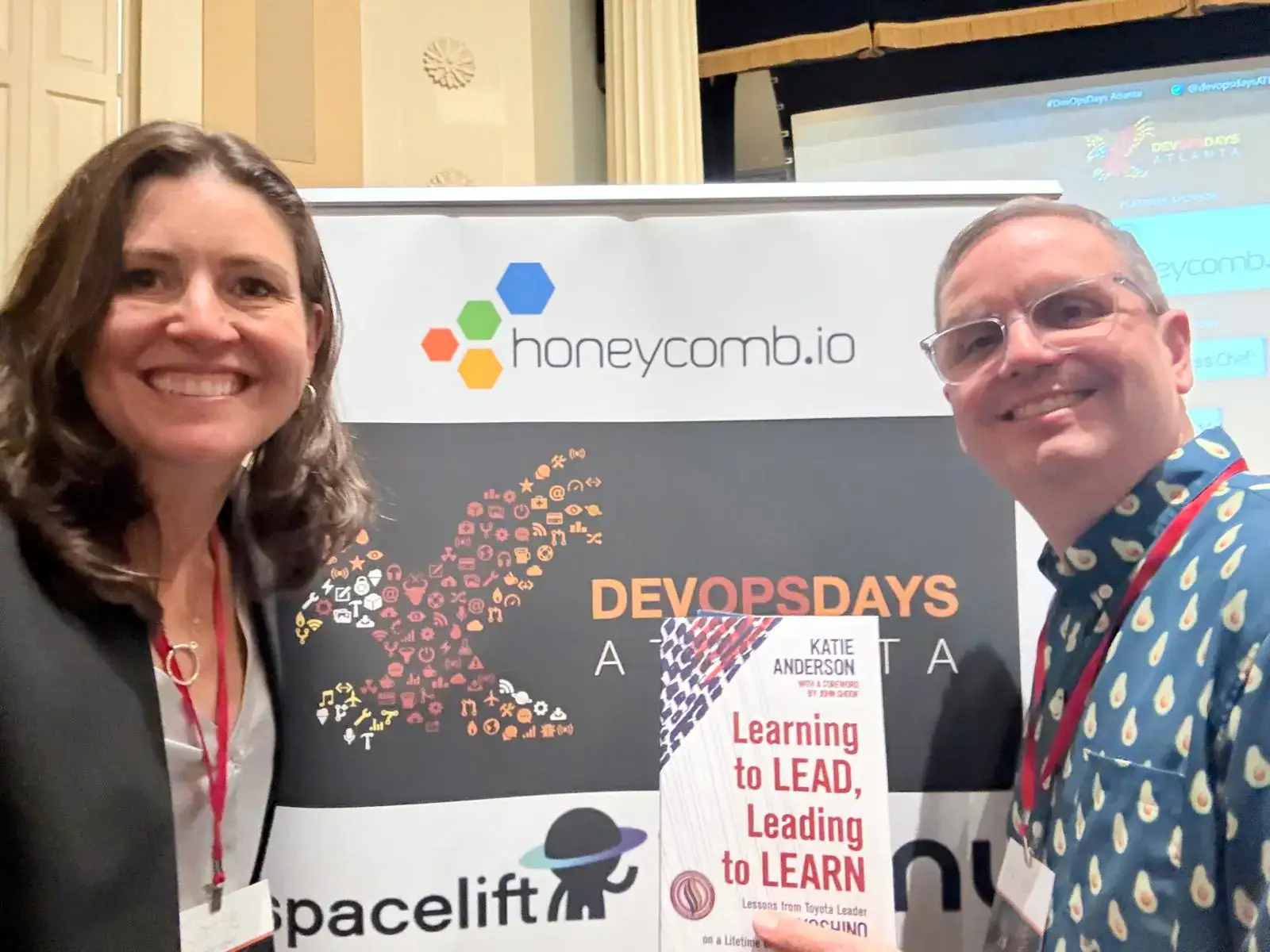

We met earlier this year at DevOps Days Atlanta, where he and I shared the stage to talk about learning, leadership, and continuous improvement.

Nathen and I immediately connected on so many levels, especially around our shared passion for helping leaders and organizations accelerate learning and continuous improvement, and for translating research into practical application.

I also appreciated Nathen’s review and promotion of my book on LinkedIn earlier this year. See the screenshot below for what he found most impactful.

DevOps Days Atlanta

As I mentioned, Nathen and I met at DevOps Days Atlanta earlier this year.

On Day 2, he gave a five-minute “Ignite” talk.

You’ll want to watch it: he traces the “origin story” of DevOps through Aerosmith and Run-DMC’s collaboration on “Walk This Way.”

Ignite Talk – DevOps: A Way of Being – Nathen Harvey:

I also gave a keynote presentation at DevOps Days Atlanta on Day 1 about how to create cultures that grow people and get results.

Drawing on real-world examples and my global leadership experience, including immersive time in Japan, I share in this leadership keynote how leaders create a Chain of Learning® by setting clear direction, providing meaningful support, and committing to their own development.

Watch the recording below to explore how to build a culture where people are empowered to think, learn from mistakes, and adapt—so that ultimately your organization can thrive not just in spite of change, but because of it.

Leading to Learn® – How to Create Cultures That Grow People and Get Results – Katie Anderson Keynote at DevOps Days Atlanta 2025:

Reflect and Take Action

One insight stood out clearly: AI is an amplifier. It doesn’t inherently make us better — it magnifies whatever is already present, whether that’s strong learning habits or existing friction.

The real power of AI lies in how we use it to enhance learning, not replace it. Drawing on the concept of jidoka—automation with a human touch—we explored how technology can support humans to think more clearly, see problems sooner, and focus on higher-value, more creative tasks.

When used intentionally, AI can accelerate learning and elevate human capability.

As you listen to this episode, reflect on:

- Where in your work are you using AI or other technologies? And are they truly helping you get better at getting better?

- How might you design your systems so that technology supports the speed of learning in problem-solving, not just the speed of delivery?

- How are you ensuring that the human touch remains at the center of your leadership, learning, and work with your team?

Take Nathen’s advice and have that human conversation with someone who might be introducing friction into your work.

Don’t start with the problem — start with connection.

When we lead with humanness, we create the foundation for true collaboration and improvement.

Connection is the bond between the links in our Chain of Learning. It’s what allows us to grow and create meaningful impact.

Important Links:

- Learn more about my coaching, trusted advisor partnerships, and leadership learning experiences

- Connect with Nathen Harvey

- Follow me on LinkedIn

- Learn more about DORA

- Join the DORA community

- Download my free KATALYST™ Change Leader Self-Assessment

- Episode 13 | 3 Ways to Break the Telling Habit® and Create Greater Impact

- Episode 40 | Escape the Doer Trap: 3 Simple Shifts to Instantly Get Unstuck

- Episode 44 | Master the Coaching Continuum and Become a Transformational Improvement Coach

- Episode 9 | The 8 Essential Skills to Become a Transformational Change Katalyst™

Listen and Subscribe Now to Chain of Learning

Listen now on your favorite podcast players such as Apple Podcasts, Spotify, and Audible. You can also listen to the audio of this episode on YouTube.

Timestamps:

03:04 – What DORA is and how it’s used as a research program for continuous improvement

04:31 – AI’s primary role in software development as an amplifier, both where organizations are functioning well and where there’s friction

05:53 – Using AI to generate more code in software engineering

07:03 – Danger of creating more bottlenecks when you try to speed up processes

07:44 – Importance of value stream mapping to understand the customer journey

10:41 – How value stream mapping creates visibility across silos so people see different parts of the whole process

10:55 – The process of gathering information for the State of AI-assisted Software Development report

12:20 – Identifying seven team archetypes from a survey of nearly 5,000 respondents and learning how to use the results to improve performance

14:18 – Examples of several team characteristics and how they apply across industries

16:33 – The negative impact on throughput of focusing on the wrong part of the process

17:00 – Looking at different types of waste to avoid putting undue pressure on people

17:51 – Why DORA finds speed and stability are not a trade-off: teams either ship fast with stable changes or work slowly with frequent rework and rollbacks

18:50 – Three big things you need to improve throughput and quality

19:44 – Why the legacy bottleneck team archetype struggles with instability and elevated levels of friction

21:22 – Why harmonious high achievers deliver sustainable, high-quality work without burnout

22:37 – How the report findings are being used to help improve organizations

23:42 – The seven capabilities in the DORA AI Capabilities Model that amplify the impact of AI adoption on team and product performance

26:27 – The capability of working in small batches to see changes through to completion

28:52 – How to leverage AI to elevate human work vs. machine work

30:58 – How AI makes new skills accessible but doesn’t make anyone an expert in a specific skill

31:44 – Leveraging AI to help you complete tasks that would otherwise take longer

32:43 – Using AI to elevate creative thinking without replacing your own thinking

33:56 – Ability to ask AI “dumb” questions to improve collaboration across teams

34:49 – Designing experiential learning where there’s no step-by-step path to reach outcomes

37:08 – Importance of collaboration when moving from point A to point B

37:35 – The difference between trainers and facilitators

39:03 – Using the DORA report to form a hypothesis for your next experiment to test whether a process is working

39:55 – Two ways to start leveraging AI to accelerate learning

40:23 – Importance of learning AI by using it

40:58 – Benefits of having a conversation with someone who introduces friction to your work

44:21 – The concept of jidoka in designing systems that empower humans to do their best thinking and work

45:22 – Questions to ask yourself as you reflect on the role of AI in your organization

Full Episode Transcript

Nathen: [00:00:00] And the AI can really help with that. I was talking with a colleague a little while ago, and they were telling me that they were learning this new domain and they felt super comfortable asking all of the dumb questions to the AI, so that when they then went and spoke to an expert in that domain, they would have smarter questions to ask. That collaboration could happen more seamlessly, and they wouldn’t feel like such a burden on the expert because they had done some due diligence. They’d asked some dumb questions, and the AI, of course, has infinite patience.

Katie: Welcome to Chain of Learning, where the links of leadership and learning unite.

This is your connection for actionable strategies and practices to empower you to build a people-centered learning culture, get results, and expand your impact so that you and your team can leave a lasting legacy. I’m your host and fellow learning enthusiast, Katie Anderson.

AI is everywhere right now in our tools, our workflows, [00:01:00] even our daily conversations.

And here’s the real question: is AI actually helping us get better at getting better? Or is it simply amplifying the complexity and friction that already exist? In this episode of Chain of Learning, I sit down with Nathen Harvey, developer relations engineer at Google and leader of the DORA Research team, to explore how AI is reshaping not just how we work, but how we learn, collaborate, and grow as humans.

Nathen has spent his career helping teams realize their potential by aligning technology with human capability. He’s a co-author of the State of DevOps reports and a champion for turning research into real-world action. We met earlier this year at DevOps Days Atlanta, where he and I, along with past Chain of Learning guest John Willis, shared the stage to talk about learning, leadership, and continuous improvement.

Nathen and I immediately connected on so many levels, especially around our shared passion for helping leaders and organizations accelerate learning and [00:02:00] continuous improvement, and for translating research into practical application. In this conversation, we explore what it truly means to get better at getting better, diving into the latest DORA 2025 report on AI-assisted software development and what it reveals about how teams can use AI to amplify learning, accelerate feedback, and improve outcomes.

Whether you lead software development teams or any other kind of team, the lessons from this research go far beyond technology. You’ll gain insights into how to strengthen collaboration, reduce burnout, and use AI to elevate human potential, not replace it. We started off with a question that so many of us are asking right now with all the buzz about AI: how can we make sure it’s actually helping us get better at getting better, not just move faster or do more work? I asked Nathen to share what he’s learning through the DORA 2025 report, how teams are really using AI, and what makes the difference between amplifying learning [00:03:00] and amplifying friction.

Let’s dive in.

Nathen: DORA is a research program that’s been running for over a decade now. It looks into the capabilities and conditions that technology-driven teams and organizations need to thrive. It used to be an acronym, but we de-acronymed the word a while ago. So DORA stands for nothing.

Which sounds really bad when you say it. So instead I say that at DORA, we live and breathe by our tagline, which is “get better at getting better,” because it’s really a research program that looks into how we help teams go on this journey of continuous learning and continuous improvement.

That’s what this is all about.

Katie: And that is the key theme of this podcast. It’s the Chain of Learning: how do we get better at getting better, collectively and individually, and how do we leverage technology to enable our human learning to get better as well? So let’s explore some of the key findings of the 2025 DORA report.

I think they’re super interesting. [00:04:00]

Nathen: Yeah. So our report is called the State of AI-assisted Software Development. We are in the technology space, and of course in the technology space, as well as so many other spaces, AI right now is, I don’t know if it’s taking all the oxygen out of the room or if it’s just the hot topic that everyone is talking about.

And as a research program, we really look into what’s happening right now in the world, so obviously we dug into AI pretty deeply. I think the big TL;DR from the research is that AI’s primary role in software development is that of an amplifier. An amplifier, or sometimes we call it a mirror.

We basically look at the way that organizations are operating. In organizations that are operating well, where software changes can flow through the system and make it out to customers very easily, AI is gonna amplify that. But if you flip over to the other side and you [00:05:00] look at organizations where there’s a lot of friction, a lot of pain in getting change delivered to your customers, and things along those lines, AI is gonna amplify all of that friction and all of those pains.

And so I like to say that you feel the pains of these disjointed systems more acutely when you have AI. One of the easiest ways to think about this when it comes to software development is, you know, usually it’s a software engineer that sits down and writes code.

This is something that we wanna ship off to our customers. One of the steps along its journey into production is that another engineer comes along and does a code review. We do these code reviews so that we can improve knowledge sharing about what this code base does. We can also use them as a feedback mechanism.

You know, maybe I would’ve implemented it slightly differently; let’s talk about that. Or these are the practices that we have on our team; let’s make sure you’re in line with those. But a lot of what software engineering is doing today is using AI to generate more code, [00:06:00] so you can kind of see where this is going, right?

All of a sudden we’re generating an order of magnitude more code, but it all still has to go through a review process. So if your reviews were already a bottleneck, and in many cases they already are, and what you do is improve something that happens to the left of those reviews, right before them, you’re generating a whole lot more code.

Now we’re trying to send more code through the same bottleneck. That’s gonna hurt more. It’s gonna slow the process down even further and potentially lead not only to slower deliveries, but also to lower-quality code that we end up shipping out to our customers.

So that’s kind of how we think about AI as this amplifier. It really amplifies the good and the bad in these organizational systems.

Katie: You can take that and extrapolate it to any type of work or industry. I mean, in the lean world, we talk about creating pull rather than pushing work to the next phase for that same reason, because then you’re just creating a backup [00:07:00] of inventory, which is waste.

You know, you need to work on the bottlenecks. So if you’re just speeding things up and pushing them forward, it’s actually creating more waste and more challenges in the organization. So we really have to look at improving the entire value stream, which is something you talk about in the report too.

So maybe we can talk about that as part of this too: you can’t just optimize a subsystem, right? You have to look at the interconnectedness across the whole value chain of creating that product or service and the value for the end customer.

Nathen: Totally. And our research, and the way we approach software development and the software delivery lifecycle, takes a lot of lessons from Lean.

So it’s good that you’re reinforcing those lessons. We’re taking them well, right? And we really encourage organizations to do something like a value stream map to understand, you know, from the idea all the way through to a thank-you from a customer, what does that process actually look like?

Or you could even, you know, [00:08:00] tighten up the aperture a little bit, if you will, and think, all right, so I wrote some code. What are all of the steps that it has to go through before that feature lands in production and a user can interact with it? And we find, both in our research and in practice, that when you do a value stream map with a cross-functional team, that mapping exercise reveals so many things every time. Going back to both of our backgrounds in learning and development, it’s one of those things where I find, you know, I’m pitching this idea to a team, and sometimes the team will ask, okay, well, what’s the output of that value stream mapping exercise gonna be?

I’m like, we’re gonna find some waste, and it’ll give you some ideas around what to go fix. Yeah, cool, but what are we gonna go fix? What are we gonna do next after this? I’m like, I don’t know. We have to go through the exercise; we have to go through the mapping to understand that. And sometimes that leaves people a little uneasy.

I don’t know if [00:09:00] you’ve experienced that yourself.

Katie: Absolutely. We are such an outcomes-driven, doing, fix-it culture that we want to just jump to the solutions, but actually understanding the process is where we will have those aha moments and really understand where those bottlenecks are.

Right. And if you think about Goldratt, the bottleneck is where we need to focus. You know, I was just at the Association for Manufacturing Excellence and heard Goldratt’s son, and he was talking about how, if we fix the upstream process, as you just said, that’s great, we’re doing it faster, but we’re still coming to that bottleneck.

It’s not helping. We actually wasted time on improvement upstream when we really should be focusing on that bottleneck process. So we can’t amplify with whatever technology, whether it’s AI or, you know, automating a system upstream, if we’re still not looking at the whole system downstream.

Nathen: Yeah, totally. A good friend of mine, Steve Pereira, who does a lot [00:10:00] with value stream mapping and value stream management, taught me what I think is one of the most important phrases about value stream mapping: it’s the mapping, not the map, that’s important, right?

Katie: Yes. It’s the process.

Nathen: Process. It’s the activity of coming together and telling and finding those stories. And yeah, sure, print the map afterwards and show it to a new hire who’s coming onto the team. But you have to understand that they will not get out of that map what you got during the process of building the map, right?

Katie: And the process of the collaboration.

I mean, I remember I did a lot of value stream mapping, gosh, 15, 20 years ago when I was working internal to healthcare systems, and the ahas of, you know, a doctor seeing what actually happens in other parts of the work. It creates visibility across silos, which is so critical.

Nathen: Yeah.

Katie: All right, we’re diverging on value stream mapping. I wanna go back to AI and the results of the DORA report.

Nathen: Yeah. So let me back up a little bit and share something about our methodology. We do a couple of [00:11:00] things. One thing we do is run a giant survey, and we try to get input from as many different technology-driven organizations as possible.

And just so you know, every organization is a technology-driven organization, right? So every type of organization around the world, in every industry vertical. We were fortunate enough this year to get nearly 5,000 survey respondents, and that gives us a good bit of data. One of the things we did across those 5,000 survey respondents was try to understand what some of the things are that are common across those teams. We looked at a number of different characteristics or outcomes that we actually care about: things like team performance, product performance, software delivery throughput and stability, and individual effectiveness. How much time do you report spending doing valuable work? How much friction do you experience in a day?

And how much burnout do you have from your work? So we took all of these characteristics together and ran a cluster analysis [00:12:00] across the data. What that allowed us to do was identify these profiles, or archetypes, of teams that we see in the data. Within each cluster, teams are very similar across those different attributes that I just listed out, but each cluster is distinct from the others.

So we ended up with seven different clusters. Now, one of the hardest problems in research and computer science is naming things.

And so we gave them each two names. The first is just a numerical name, so clusters one through seven. But that’s kind of meaningless and hard to keep track of, so then we give them more descriptive names, like foundational challenges, the legacy bottleneck, or harmonious high achievers.

The idea here is that, as a team, you can sit down and have a conversation or do some sort of [00:13:00] assessment across those seven or eight different characteristics that I listed and understand where you fit within these team profiles. And the beauty of that is you can then find where your weak spots are.

Because again, just like with a value stream map, if what you find is that burnout is pretty good but not great, right, it could be better on your team, but product performance is really, really low, then if you go and focus on burnout, you’re probably gonna improve the outcomes for the individuals on the team.

But if you’re not improving product performance, which is maybe your biggest weakness, that’s potentially gonna have longer-term negative effects, right? You wanna improve product performance so you keep having customers. If you have no customers and your team is not burnt out, that’s good for your team until there are no more customers, and then they can’t be a team anymore.

Katie: Let’s dive into some of those characteristics of the teams to give some examples for people, so they can think about how they relate, whether or not they’re in software [00:14:00] development or other teams, because I think a lot of these qualities that you were assessing in teams can really be applied regardless of what the actual work is that people are doing.

Nathen: Totally. I think some of them are kind of universal, and others, you know, you probably want to tune a little bit to your domain, right?

Katie: Yep.

Nathen: But as an example, team performance, that’s probably something that is universal.

When we evaluate team performance, we look at things like: Is your team able to innovate? Is your team able to deliver what they say they’re going to deliver? Are they delivering on their promises? That applies obviously in software, but it also applies in the healthcare domain, right?

As an example, right? It applies everywhere. Burnout and friction, these are individual things; obviously they apply regardless of where you are. The same goes for the time that you spend doing valuable work, maybe balanced against how much time you’re spending doing toilsome work or encountering that friction.

Obviously everyone wants to improve valuable work. There are some things [00:15:00] that are very particular to the software delivery domain or the software domain. We talk about software delivery throughput and software delivery stability, and that’s really a measure of how much change, software change, we can move through the system, and, when we do ship a change into production, how stable that change is.

Do we have to roll it back right away? So while those two in particular are clearly about software, you can probably expand or contextualize them into whatever domain you’re in.

Katie: Right. Well, when I was looking at the report that you sent to me, I was thinking about how this could connect with other industries.

So one is more like the speed at which you’re able to deliver work, the throughput of your work, whatever it is, from A to B, passing it forward. And then the other one is really the quality of the work. Is rework coming back to you because you pushed through something that wasn’t as good, or, as you said, as stable? It could be whatever the quality is. So it’s sort of the throughput of your work [00:16:00] as well as the level of quality: are you not pushing defects forward? How are you ensuring that higher level of quality? These are really just things for people to be thinking about in their own work, and how they relate in their domain, as Nathen said.

Nathen: I think it’s also really important that you take as holistic a picture of team performance as you can, right? You could imagine a place where a team only cares about, say, the throughput of the work: how much work can we deliver? That’s the only thing that matters. But the reality is, if that’s the only thing that you are focused on, you may end up burning out the people on your team, and so over the long term, that will have an impact on your throughput, right? We aren’t trying to build humans that are just machines delivering work, delivering work, delivering work, right? Oftentimes, especially in knowledge work, that also minimizes the amount of engagement that I have.

And why is it important that it’s a human that does that work versus, say, a computer or some automated process?

Katie: Absolutely. And for my [00:17:00] listeners too, if we think about the pillars of the Toyota Production System, we have just-in-time and built-in quality, right? And they both have to be balanced.

And then if we look at the different types of waste, it’s not just muda, the Japanese word for waste like rework and inventory and all that. It’s also, you know, are we level loading the work so we’re not creating burnout for people and putting undue pressure on them? So we have to look at it holistically, whatever terms you’re using. We can’t optimize one area, because then we’re sub-optimizing the rest.

And sometimes I’ve found too that if you only focus on speed, then people don’t care about quality, and this is what was happening. If I’m just judged on the number of widgets I’m pushing forward, or whatever it is, it doesn’t matter what their quality is; that’s all I’m getting graded on in my performance. So we really have to be careful about what we’re telling our teams is the most important thing.

Nathen: Totally.

And in the software domain, one of the things that DORA has found over the decade-plus that we’ve been doing this research is, you know, we often think about [00:18:00] exactly what you said there: we can either be fast or we can have quality. It’s kind of a trade-off, right? But what DORA has found, in the software domain at least, is that these two things tend to move together.

So either you’re fast and have stable production pushes, or you work very slowly and you’re doing a lot of rework and rolling back changes very often, basically every time you push out a change. And of course, one of the clear insights that we see is, first, that it’s not a trade-off; they move together.

And one of the best ways to improve both throughput and quality is to work in smaller batches. Again, leaning on Lean.

Katie: Ha ha ha.

Nathen: Lean, ha ha. Sorry for the pun there. Yeah.

Katie: No, it’s right. It’s true. If you’re batching, that’s a lot of bad quality. You can fix it better if it’s smaller and then, you know, make those adjustments. So…

Nathen: Yeah.

Katie: Yes.

Nathen: Yeah, and in DORA terms, we oftentimes talk about three really big things that you need. You need to have a climate for learning, you need to have fast flow, and you need to have fast [00:19:00] feedback. Those three ingredients are the ways that you improve, in our case, software delivery performance.

Now, each one of those ingredients is really a category of ingredients that we break down into smaller capabilities as well. But again, that climate for learning, fast flow, and fast feedback translates to just about any industry, any profession that you’re in.

Katie: You know, I was really interested in the qualities of these seven different team archetypes you came up with, and maybe we could explore the one you called the foundational challenges group and what they are.

I think that was on one end, and then there’s the other end, which is that high-performing team. What are some of the differences between those types of teams in terms of the individual and team characteristics?

Nathen: I’ll take two of them: the legacy bottleneck, which is cluster two, and the harmonious high achievers, which is cluster seven.

So with the legacy bottleneck, what we see when we look at the data (and to be very clear, we kind of tell a narrative around what we’re seeing in the data, and [00:20:00] it probably resonates with these teams) is that the legacy bottleneck team is kind of in a constant state of reaction.

There are unstable systems that are dictating their work and undermining morale. So product performance in this case is pretty low. The team might be delivering regular updates, so their throughput is not terribly low, but their stability is. In other words, they’re shipping a lot of changes, but then they’re rolling back those changes and having to rework them quite frequently. And so that’s not very good. We see elevated levels of friction and burnout on the team, so it’s not really a sustainable team from a human perspective. And these significant and frequent challenges that we see with the stability of the software and its operational environment are leading to that high volume of unplanned work.

That’s very reactive. So I don’t know about you, Katie, but I’d rather not be on this team.

Katie: No, it sounds terrible.

Nathen: Yeah.

Katie: Although I think we [00:21:00] probably all have had some experiences of that, so it’s like let’s avoid that at all costs.

Nathen: Yes, yes. The other thing that’s true is that no team is static, right?

And no individual experience is static, right? You’ve worked on different teams, you’ve worked in different organizations, and sometimes, even within the same organization, over time you can feel all of these things. But if we contrast that legacy bottleneck with these harmonious high achievers, as we call them, we look at the data and we’re like, oh, this is what really good, or greatness, looks like.

It’s a virtuous cycle where we see a stable, low-friction environment really empowering the teams to deliver high-quality work sustainably and without burnout. So team performance, product performance, software delivery throughput, and time reported spent doing valuable work are all very high, whereas burnout, friction, and software delivery instability are very low.

And so that’s really good. What we see in the data is a [00:22:00] stable technical foundation that supports both the speed and the quality of the work, while also supporting the workers, the individuals that are there.

Katie: So it’s like, how do we then make interventions to move from these lesser-performing teams to the higher-performing ones?

Nathen: Exactly. That’s always a question that we want to answer, because the research looks into the state of things, to understand how teams are doing today. But the more important research is how we take that state of things and these lessons and apply them so that teams can improve and move closer to that harmonious high achiever state, if that’s where they wanna head.

Katie: What are some of those practices that you’re exploring and helping organizations with, to take the findings and do something with them?

Nathen: So one of the things that we looked at this year, you know, AI as I mentioned, is a big area of focus. One of the things that we see is almost everyone is using AI professionally, [00:23:00] and yet we see these varied levels of team performance.

So that led us to this question: maybe it’s not that you’re using AI, but rather how you’re using AI that’s actually driving the better outcomes that you care about. And so we investigated a bunch of different capabilities and conditions that we think, together with AI, are really gonna help with those.

And what we ended up with was what we call the DORA AI capabilities model. These are things that amplify the impact of AI adoption on things like team performance, product performance, and friction. Well, reducing friction: we wanna amplify the reduction of friction, just to be very clear.

So we identified seven capabilities. We started off our research with, I think, 15 hypothetical capabilities, and then we looked at all of the data and landed on these seven. And many of these, I think, apply regardless of whether you’re using AI in software development or anywhere else.

The first is a clear and [00:24:00] communicated AI stance. What we know is that many organizations are unclear about how, when, where, and if you should be using AI, and when there’s ambiguity there, it slows down innovation and increases friction. You know, as an individual I might play with AI on the side, and then I come into work and I’m not sure if I’m allowed to use it. But boy, it was fun over there on the side, so I’m gonna use it here. But am I gonna get in trouble for using it?

I don’t know if I’m allowed to, right? And so all of that ambiguity really introduces friction and, like I said, prevents some of the innovation that you might get. The next capabilities are related: a healthy data ecosystem, and giving AI access to that internal data. And we experience this as humans as well, right?

If you need to find some data within your organization and it’s really hard to do so, that sucks, right? It’s hard for you to make the right decisions, and [00:25:00] maybe it’s hard to find because the data is siloed and there are ownership controls over that data and you don’t have access to some of it.

The same is gonna be true for an artificial intelligence that’s trying to help you apply the right policies and make the right decisions based on data. So that’s important. Then we have some technical practices. One is strong version control practices, and this is probably the one that’s most closely related to the software domain.

But version control is basically, well, you’ve experienced version control, Katie, whether or not you know it, if you use Google Docs.

Katie: Oh yeah.

Nathen: You can go back and look at previous versions of that Google Doc. Contrast that with what we used to do, which was ship documents around as email attachments, and you end up with a document that’s like podcast outline underscore Katie underscore Nathen underscore final, October, final two. Yeah, exactly. Right.

Katie: Final three, final four.

Nathen: So we’ve moved away from that. But in software that becomes really important, because [00:26:00] having those strong version control practices really enables us to do a couple of things. The first thing it enables us to do is to roll back to a good working state if something bad gets introduced.

And then one of those practices is also what we call checking in, so committing the changes to version control so that you can roll back to that previous version. And when the changes you commit are very small, you can easily just step back if you need to. The next capability is working in small batches, which we’ve already talked about. It turns out that when you pair a human and an artificial intelligence together, yes, the AI can maybe generate something giant for you very quickly, but that’s not gonna lead to a really great outcome.

It’s even better at getting a small task, executing on that small task, and then seeing it through to fruition, right? Just like we are. Another one is a user-centric focus. As technologists, we oftentimes use new technology just because it’s new technology, [00:27:00] but we shouldn’t do that. We should remember that the reason we have this technology is to serve a user, to provide value to them.

And then finally, the last capability is what we call a quality internal platform. A platform is a set of shared capabilities within an organization that multiple parts of that organization can use. Without that shared platform, oftentimes you might see this department over here getting a lot of value out of AI while that department over there is really struggling.

When you have a shared platform, you sort of level the playing field and allow them both to succeed.

Katie: That’s great. So for listeners out there leading a team or working in a team, how can you use some of these domains and qualities to think about what you need in order to use AI, and really any new technology, to be the most effective in your team and in your own work? And how can you get better at getting better, which is, you know, the whole point of the DORA report: to give information on how to get better.

[00:28:00] Nathen, I wanna go back and talk about AI generally. You know, when we met at DevOpsDays Atlanta earlier in 2025, I immediately connected with you, with your energy and your focus on learning.

And one of the things I was addressing in my talk, and so many of the others were too, is how do we embrace machine learning, but not in a way that diminishes the human aspect of our work? We’re still working in organizations. I think there’s a lot of fear out there that AI is gonna take over everything.

And I’d really like to hear your perspective on the concept of jidoka, from Japan and Toyota, which is about automation with a human touch, right? It’s about how we separate human work from machine work, not to diminish humans, but to elevate them. And I think this has been true across all different technologies as they’ve advanced.

But it’s even more so now: we haven’t had machine learning alongside human learning before. So how are you and the [00:29:00] organizations you’re working with leveraging AI, not to diminish humans, but to elevate them?

Nathen: Yeah. It’s such a fascinating thing that we’re seeing happening right now, and you see it in so many different ways as well.

I think one of the most brilliant things about the state that we’re in right now with AI is that it is democratizing a lot of different functions. So, I’m not a designer, but I can go to AI and it can help me design a graphic or a user interface. As someone who’s not skilled in that thing, I have access to do that thing.

But the reality is, if I’m a designer, I can probably look at the thing that Nathen just created and know that it was probably created by AI, and see the flaws. I think that the experts maintain that expertise and can use AI to augment their abilities and maybe get things [00:30:00] done faster or differently, maybe even in a more fun way.

The novices, or the beginners, I think it gives us access to do that other thing. I think it’s really important, though, as an organization, that we recognize the difference between the two, right? Oftentimes in software development, we talk about product managers, who generally are not the ones writing the code and building the systems, but with AI they can build a prototype very quickly.

Something that feels like it works, but the reality is it’s not really ready for real-world use. It doesn’t have all of the operational capabilities built into it; things like reliability and security might not be there, and so forth. It looks good and it kind of works. The fear is that you then just put that in front of customers, and we’ve seen challenges with that.

Like, you can’t do that. So, to tighten it up here a little bit, AI makes new skills available and accessible to me, [00:31:00] but it does not make me an expert. And I think it’s really important that we recognize that. But because it makes those new skills available to me, perhaps I can collaborate better with an expert. Instead of having my idea just in my head or in my words, I can build something that helps express my idea faster and at a higher fidelity, and then go have that conversation with an expert around that thing.

Katie: No, that’s great. And I think if we go back to some of that burnout, you can leverage AI to do some of the tasks that maybe would’ve taken you longer, if you have that capability out there already, but not just rely a hundred percent on what the AI said. I personally have been leveraging AI to learn my voice a lot better, or to pull out things from past articles I’ve written to remind me of things. Or, hey, I wanna write another article.

Can you pull out some themes from some other things I’ve written? And then I don’t just take what [00:32:00] AI did; I use that as a starting point to give me ideas. And so it speeds up that creation process. I’m still the one using it to create, but maybe it’s doing a little research or some consolidation for me, and that speeds up my ability.

I mean, even for this podcast, the output at the end is not what the AI said, but it helps me think about what some of the key themes are and how I might frame this up in the beginning and the end. It accelerates me getting to that end point, but it doesn’t replace me doing my own thinking around it.

Nathen: Absolutely. And, I don’t know, my experience is that working with AI tends to do a couple of things. One, it tends to be fun.

Katie: Yeah, great.

Nathen: I enjoy doing that, so that’s cool. But I also think that you’re seeing optionality open up, if you will. You might go try something that you maybe wouldn’t have tried before, because you have AI now, and now you can compare: oh, I went down this path, which I might not have explored before, versus this one that is tried and true.

Let me look at [00:33:00] the difference between these two and maybe take some inspiration from both to come up with a third option, the thing that I’m actually gonna ship to my customers or actually gonna do. So I love that. I think that’s super cool.

Katie: I do too. I’d say it helps actually accelerate and elevate my creative thinking.

Nathen: Exactly.

Katie: That doesn’t replace it. We still need to have our humanness around it, but how can we leverage AI and other technologies to help us get better at the things that we’re uniquely able to do? And that also includes human interaction. I mean, we’re still working together as humans, with teams and others.

And so that teamwork, that collaboration, the quote unquote soft skills of how we engage as, as human beings become even more important than maybe our technical skills.

Nathen: Right. And AI can really help with that. I was talking with a colleague a little while ago, and they were telling me that they were learning this new domain, and they felt super comfortable asking all of the dumb questions to the AI, so that when they [00:34:00] went and spoke to an expert in that domain, they would have smarter questions to ask. That collaboration could happen more seamlessly, and they wouldn’t feel like such a burden on the expert because they had done some due diligence.

They’d asked some dumb questions, and the AI, of course, has infinite patience. It can help guide you on that learning, I think. I think that’s really cool.

Katie: Right, how does it accelerate the rate of learning and continuous improvement as well? And before we come to the end here, I wanna touch on our very first conversation, where we were talking about how you lead learning and development in organizations, regardless of whether it’s leveraging technology or whatever it is. This kind of relates to how you apply the findings of a report: how do you create an experiential learning experience where you don’t necessarily know what the outcome is, and there’s not a step-by-step path on how to get there?

Just like there isn’t a step-by-step [00:35:00] path for how to take the findings from DORA and move your team from this legacy bottleneck to this high-performing team. What are some of your insights on how change leaders and facilitators, whatever your role is in the organization, can get better at helping move toward those outcomes and move through that uncertainty?

Nathen: It is a super fun, challenging space that we both get to work in. You know, we often say you need to take the findings of DORA and contextualize them for your team, which is absolutely accurate advice. But how? How do I do that, right? And this is a place where someone like a coach or facilitator can come in, someone who understands the research really well and understands your domain. Whether that’s through a value stream mapping exercise, or through an open conversation, asking a lot of open-ended questions and driving that dialogue across the team members, that becomes a really important and powerful skill to have.

And that’s some of the most interesting work, [00:36:00] because as a facilitator, just like the participants in that workshop, you have no idea what the outcome is going to be. As facilitators, we can kind of project that it’s gonna be a good outcome, but it’s gonna be unique.

It’s not gonna be the same outcome that I had with the last team. Heck, even if it was this team six months ago, we’re gonna get a different outcome this time by running through the same thing. So I think it is good for facilitators to have a framework in mind for how they approach that problem.

And we’re gonna take you through this framework. We’re gonna take you through this experience, but it is a unique experience every time.

Katie: Right. And our role as facilitators is to help people go through this journey from here to there. And we know the “there” is to create next steps, right?

But we don’t necessarily know what those next steps are. For listeners, I talk about how to become a more skillful facilitator in a past episode, and I’ll put links to that in the show notes. And Nathen’s saying the same thing: it’s about how you create the roadmap, and then [00:37:00] it’s the experience of going through it.

Just like we talked about earlier, someone can’t just read this report and say, I know exactly what we need to do. We need to actually collaborate and think about our context: Where are we today? Where do we need to be? What are the gaps? And then think about the plan. So it’s really about using our problem-solving mindsets too; it’s not just a prescriptive “do this and this will happen.”

Nathen: And I think it’s super important, even the labels that we use. A facilitator or a learning coach, that’s what we are; we are not trainers, right? When we talk about training, that’s like, do steps A, B, and C and you will get outcome number two, right? And there is definitely a role for that, especially when we’re thinking about technology.

Like, just gimme the basics of how this technology works. But that sort of training environment doesn’t always translate into actual effective adoption and effective use of a technology. I have to be able to use that technology, or those lessons, in my context, in the daily work that I’m doing.[00:38:00]

And so we are here to help people learn. And the best thing about that: a trainer knows everything about the lesson plan and what all the outcomes are gonna be the minute they step in front of the room. A learning coach or a facilitator has no idea. So we’re literally learning together with the participants of that workshop.

To me, that’s, that’s the best part of the experience.

Katie: Well, that gets me so excited too, when I come in and help organizations with leadership retreats or a learning experience. It’s not like I’m gonna train you on how to ask effective questions. I mean, I might give you some tips and guidance, but let’s practice and let’s learn.

Or you need to solve a big problem in your organization? Well, let’s break this down and let’s work together. My job is to help create the roadmap and the interaction, the reflection, and the experience that’s gonna get them from A to B. But I can’t do it for them. And it’s exciting; it keeps it different every time.

Right. And that’s also the real value of having an outside facilitator come in, so that team members can actually do that [00:39:00] thinking themselves.

Nathen: Yes. And, and the other thing I always say about the DORA report is take our findings and use them as the hypothesis for your next experiment. Right.

Katie: I love that.

Nathen: And to me, this also helps, because I know all of the findings backwards and forwards. Now we can sit down as a team and have a conversation: we can use this finding. All right, let’s turn it into a hypothesis. Instead of a statement, it now becomes a question, right? And then we can immediately identify: nope, that’s not for us.

These three are for us. Now let’s build an experiment around putting that hypothesis to the test. Yeah.

Katie: Yes. And is it working? Is it getting us from here to there? If not, why not? And again, that cycle of learning, just as you said, gets back to the foundation of how we get better at getting better through continuous learning and continuous improvement.

Nathen: Yes.

Katie: That’s awesome. So Nathen, maybe not your top suggestion, but what is one suggestion for listeners, regardless of their industry, who [00:40:00] are looking to leverage AI to accelerate learning and their own effectiveness in organizations? What’s one thing that they should be focusing on right now?

Nathen: Can I, uh, can I give two?

Katie: Yeah, give two. Like what? Yeah. No, not just one. Yeah.

Nathen: My number one thing, because you mentioned AI in particular, and this is true with AI or any technology: use it. Just go play. As children, we learn through play. We should be learning through play as adults.

And as you approach a new technology, think of it as you’re just gonna play with it and see what happens, right? So you have to just go and experience the tool. And in experiencing it, you’re gonna learn more about how it’s good, how it’s bad, how to better interact with it, and so forth.

Probably even more important than that, if we step outside of technology, or maybe around technology, I think the most important thing that you can do is go have a conversation. Go have a conversation [00:41:00] with someone that you maybe don’t usually talk to, especially if that someone is the other who always introduces friction to the work that you have, because I bet they don’t mean to introduce that friction. I bet they don’t even understand that they are.

And the first thing you should do is not go talk to them about the friction they’re introducing, but go learn who they are. What’s their name? What do they like to do outside of work? Get to know them as individuals.

I think all too often there’s a lot of othering that happens within organizations and if we can stop that and, and first just get to know one another, it becomes much, much easier for us to get on the same page and drive towards the same goals and work together.

Katie: So powerful. We start with our humanness, right?

And then we can collaborate, work together, and leverage the technologies and AI to do that. Such powerful advice for whatever industry or role you’re in. So thank you so much, Nathen. We could talk for hours. I encourage [00:42:00] everyone to go check out the video, ’cause Nathen’s wearing his awesome avocado shirt that he is famous for.

And I’m also gonna put a link in the show notes to this incredible, very short talk that Nathen gave at DevOpsDays Atlanta (I’m sure you’ve given it in other places as well) about this concept of collaboration and how you can leverage expertise to create something better together.

And that is an example from Run-DMC and Aerosmith creating “Walk This Way.” It’s an awesome talk and a powerful example, so go check that out in the show notes. So, Nathen, how can people learn more about the DORA report so that they can take these findings and start having a conversation with their teams?

Nathen: Yeah, absolutely. I’m gonna give you three quick URLs. The first is DORA.dev. That’s where we publish all of our findings and so forth. The next is DORA.community. This is a community of practitioners, leaders, and researchers coming together online and [00:43:00] sometimes in person to figure out how we take these findings and put them into practice.

We’re sharing and learning with each other all the time. And then finally, because you said the word conversations, the third URL I’ll share is conversations.DORA.dev. What that is, is a rotating, random list of open-ended questions. So the next time you go into a team meeting, pull up conversations.DORA.dev, and whatever question comes up, hit pause and use that as your icebreaker for the meeting.

Just talk through that question, and I think you’ll find that’s really powerful.

Katie: That is so awesome, ’cause I’m all about asking better and more effective questions, so I love that prompt. Thank you, Nathen, for being here. I hope we get to see each other again soon. I always love our conversations and your energy, and of course your avocado shirt.

Nathen: Thank you so much, Katie. This has been a blast.

Katie: One of the many things that stood out to me in this conversation with Nathen is that AI, like any technology or system, is an amplifier. A tool doesn’t inherently make us better. It makes what’s [00:44:00] already there more visible, more powerful, and sometimes more problematic.

The real power of AI lies in learning how to learn and in leveraging technology to do that more effectively. That connects with something I shared with Nathen, and that I’ve been highlighting in recent keynotes and conversations with leaders about how AI fits with lean and operational excellence: the concept of jidoka, automation with a human touch. Jidoka, one of the two pillars of the Toyota Production System, isn’t about removing people from the process.

It’s about designing systems that empower humans to do their best thinking and work: to see problems, to stop when something’s off, and to free people to focus on higher-value, more creative tasks. It’s about how we separate machine work from human work and how we use technology to elevate human capability, not replace it.

While jidoka traditionally focuses on leveraging machines to identify abnormalities and ensure built-in quality, [00:45:00] we can apply the same principle more broadly, using technology to enhance what humans can uniquely do best. And that’s the real opportunity in front of us. Now, AI can help us accelerate learning, creativity, and impact, but only if we stay intentional about the role that we as humans play in the system.

As you reflect on this conversation, consider these questions. One, where in your work are you using AI or other technologies, and are they truly helping you get better at getting better? And, as Nathen reminded us, are you having fun with it? Two, how might you design your systems so that technology supports the speed of learning and problem solving, not just the speed of delivery?

And three, finally, how are you ensuring that your human touch remains at the center of your leadership, your learning, and your work with your teams? Take Nathen’s advice and go have that human conversation with someone who might be introducing [00:46:00] friction into your work. Don’t start with the problem.

Start with connection, because when we lead with humanness and human connection, we create the foundation for true collaboration and improvement. Connection is the bond between the links in our Chain of Learning. It’s what allows us to grow and create impact. If this conversation sparked ideas for you, you might also enjoy a few related episodes that go deeper into these themes: Episode 13, How to Break the Telling Habit, on how to ask better questions that empower others.

Episode 40, How to Become a Skillful Facilitator, on how to lead collaborative learning and reflection as a group. And Episode 44, How to Master the Coaching Continuum, on balancing your own expertise with developing that of others. All three build on what we explored here today: developing human skills that allow us to work with technology, not be driven by it.

If you’d benefit from support in creating your [00:47:00] roadmap to enhance learning, collaboration, and influence, I’d love to help. I work with change leaders, continuous improvement practitioners and executives like you as a facilitator, learning coach, and trusted advisor to strengthen the influence and relational skills that you need to amplify learning and continuous improvement across your organization.

You can learn more about how we can partner together to accelerate your success at kbjanderson.com, and the link is also in the show notes. And if you haven’t already, download my free Katalyst self-assessment. It outlines the eight key competencies that you need to master to be an impactful change leader, pairing your technical skills with the social and relational influence skills to become a real transformational leader.

From being a skillful facilitator to a transformational improvement coach, you can find it at kbjanderson.com/katalyst. That’s Katalyst with a K, and you can go back to episode nine to learn more about each competency. I always love hearing from you, so be sure to send me a [00:48:00] message with what you’re learning and valuing from these episodes.

And if this conversation resonated with you, please consider leaving a rating, review, or comment on your favorite platform, like Spotify, Apple Podcasts, or YouTube. It helps others discover the impact of Chain of Learning too. Thanks for being a link in my Chain of Learning. I’ll see you next time. Have a great day.

Subscribe to Chain of Learning

Be sure to subscribe or follow Chain of Learning on your favorite podcast player so you don’t miss an episode. And share this podcast with your friends and colleagues so we can all strengthen our Chain of Learning® – together.